
In the bustling world of computers, data transfer is like a high-speed relay race, where bits and bytes sprint from one hardware part to another. Ever wondered how that magic happens? It’s not just a bunch of wires and circuits throwing a party; it’s a finely tuned operation that keeps everything running smoothly.

Overview of Data Transfer in Computers

Data transfer in computers occurs through various channels that connect hardware components. Internal buses transport data within the system, enabling communication between the processor, memory, and storage devices. Each bus has a specific width, determining how many bits it can transfer simultaneously.
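
Bus width and transfer rate together set a bus's theoretical peak bandwidth. The sketch below assumes a simplified model (width in bits times transfers per second, divided by 8 bits per byte) that ignores protocol and refresh overhead. The function name is illustrative, and the DDR4-3200 figures (a 64-bit channel at 3200 million transfers per second) serve only as an example.

```python
def peak_bandwidth_bytes_per_s(bus_width_bits: int, transfers_per_s: float) -> float:
    """Theoretical peak bandwidth: bits per transfer times transfers per second, in bytes."""
    return bus_width_bits * transfers_per_s / 8


# Example: a 64-bit memory channel at 3200 million transfers per second (DDR4-3200).
wide = peak_bandwidth_bytes_per_s(64, 3200e6)
# The same transfer rate on a bus half as wide moves half as much data.
narrow = peak_bandwidth_bytes_per_s(32, 3200e6)

print(f"64-bit bus: {wide / 1e9:.1f} GB/s")    # 64-bit bus: 25.6 GB/s
print(f"32-bit bus: {narrow / 1e9:.1f} GB/s")  # 32-bit bus: 12.8 GB/s
```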

Parallel buses transmit multiple bits simultaneously over separate wires, which increases speed over short distances. Serial buses, on the other hand, send data one bit at a time per lane, which holds up better over longer distances. Transfer protocols such as PCIe and SATA (both serial) dictate how data is exchanged, ensuring compatibility between devices.

Data moves in packets organized by protocols, with each packet carrying essential information such as its destination and an error-checking code. In many protocols, the receiver checks that code on arrival and sends back an acknowledgment; if the check fails, the data can be retransmitted, so errors are caught rather than silently passed on.
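
To make the packet idea concrete, here is a minimal sketch of framing data with a header and a CRC-32 checksum, with the receiver answering ACK or NAK. The packet layout, field sizes, and the `build_packet` and `receive` names are hypothetical and not taken from any real protocol; only `zlib.crc32` and the `struct` module are standard Python library features.

```python
import struct
import zlib
from typing import Optional, Tuple

# Hypothetical packet layout, not taken from any real protocol:
# 2-byte destination ID | 2-byte payload length | payload | 4-byte CRC-32
HEADER = struct.Struct(">HH")
TRAILER = struct.Struct(">I")


def build_packet(dest: int, payload: bytes) -> bytes:
    """Prepend a header and append a CRC-32 computed over header + payload."""
    header = HEADER.pack(dest, len(payload))
    crc = zlib.crc32(header + payload)
    return header + payload + TRAILER.pack(crc)


def receive(packet: bytes) -> Tuple[str, Optional[bytes]]:
    """Return ("ACK", payload) if the CRC checks out, else ("NAK", None) to request a resend."""
    body, trailer = packet[:-TRAILER.size], packet[-TRAILER.size:]
    (expected_crc,) = TRAILER.unpack(trailer)
    if zlib.crc32(body) != expected_crc:
        return "NAK", None
    _dest, length = HEADER.unpack(body[:HEADER.size])
    return "ACK", body[HEADER.size:HEADER.size + length]


pkt = build_packet(dest=7, payload=b"hello")
print(receive(pkt))                 # ('ACK', b'hello')

damaged = bytearray(pkt)
damaged[5] ^= 0x01                  # flip one bit inside the payload
print(receive(bytes(damaged)))      # ('NAK', None)
```

Because CRC-32 detects every single-bit error, the flipped bit is caught and the receiver asks for a resend instead of passing corrupted data along.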

The memory hierarchy has a large effect on data transfer speed. Cache memory provides the fastest access, while main memory and especially storage devices introduce progressively longer delays. This hierarchy keeps frequently used data close to the processor, where it can be reached quickly, improving overall system performance.
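
A common way to reason about the hierarchy is the average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The sketch below applies it to one and two cache levels; the latencies and miss rates are made-up illustrative values, not measurements of any particular CPU.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: every access pays the hit time; misses also pay the penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative numbers only.
MAIN_MEMORY_NS = 100.0

# One cache level in front of main memory.
one_level = amat(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=MAIN_MEMORY_NS)

# Two levels: an L1 miss is served by L2, whose own average time becomes L1's miss penalty.
l2 = amat(hit_time_ns=10.0, miss_rate=0.20, miss_penalty_ns=MAIN_MEMORY_NS)
two_level = amat(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=l2)

print(f"one cache level:  {one_level:.1f} ns")   # one cache level:  6.0 ns
print(f"two cache levels: {two_level:.1f} ns")   # two cache levels: 2.5 ns
```

In this example, adding a second cache level cuts the average from 6.0 ns to 2.5 ns, because most first-level misses no longer pay the full trip to main memory.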

Devices communicate using control signals, such as voltage changes on dedicated lines, that indicate the status of a transmission (for example, "data ready" or "data received"). These signals keep sender and receiver synchronized, which is crucial for parallel operations. Achievable transfer rates depend on factors such as cable quality and electromagnetic interference, both of which can also affect data integrity.
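
One common way control signals keep two devices synchronized is a four-phase request/acknowledge handshake. The sketch below is a simplified software model of it, assuming one sender, one receiver, and three shared lines; the `Lines` class and `four_phase_transfer` function are hypothetical, and real hardware implements this with voltage levels on dedicated wires rather than Python objects.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Lines:
    """Hypothetical model of three shared wires between a sender and a receiver."""
    req: bool = False  # driven by the sender: "the data lines hold a valid value"
    ack: bool = False  # driven by the receiver: "I have latched that value"
    data: int = 0


def four_phase_transfer(words: List[int]) -> List[int]:
    lines = Lines()
    pending = list(words)
    received: List[int] = []
    # Each loop iteration lets the sender react, then the receiver react.
    while pending or lines.req or lines.ack:
        # Sender
        if not lines.req and not lines.ack and pending:
            lines.data = pending.pop(0)  # phase 1: drive the data, then raise req
            lines.req = True
        elif lines.req and lines.ack:
            lines.req = False            # phase 3: receiver has it, so drop req
        # Receiver
        if lines.req and not lines.ack:
            received.append(lines.data)  # phase 2: latch the data, then raise ack
            lines.ack = True
        elif not lines.req and lines.ack:
            lines.ack = False            # phase 4: req dropped, so drop ack; lines idle
    return received


print(four_phase_transfer([0x12, 0x34, 0x56]))  # [18, 52, 86]
```

Neither side moves on until it sees the other side's signal change, so the exchange stays in step regardless of how fast either device is.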

Multiple hardware components, such as the CPU, RAM, and GPU, participate in the data transfer process. The coordination between these parts ensures seamless operation during tasks like rendering graphics or processing complex calculations. Understanding this intricate system illuminates the sophistication behind modern computer functionality.

Types of Data Transfer Methods

Data transfer methods are essential for communication between hardware components. Understanding them clarifies how data moves efficiently within a system.

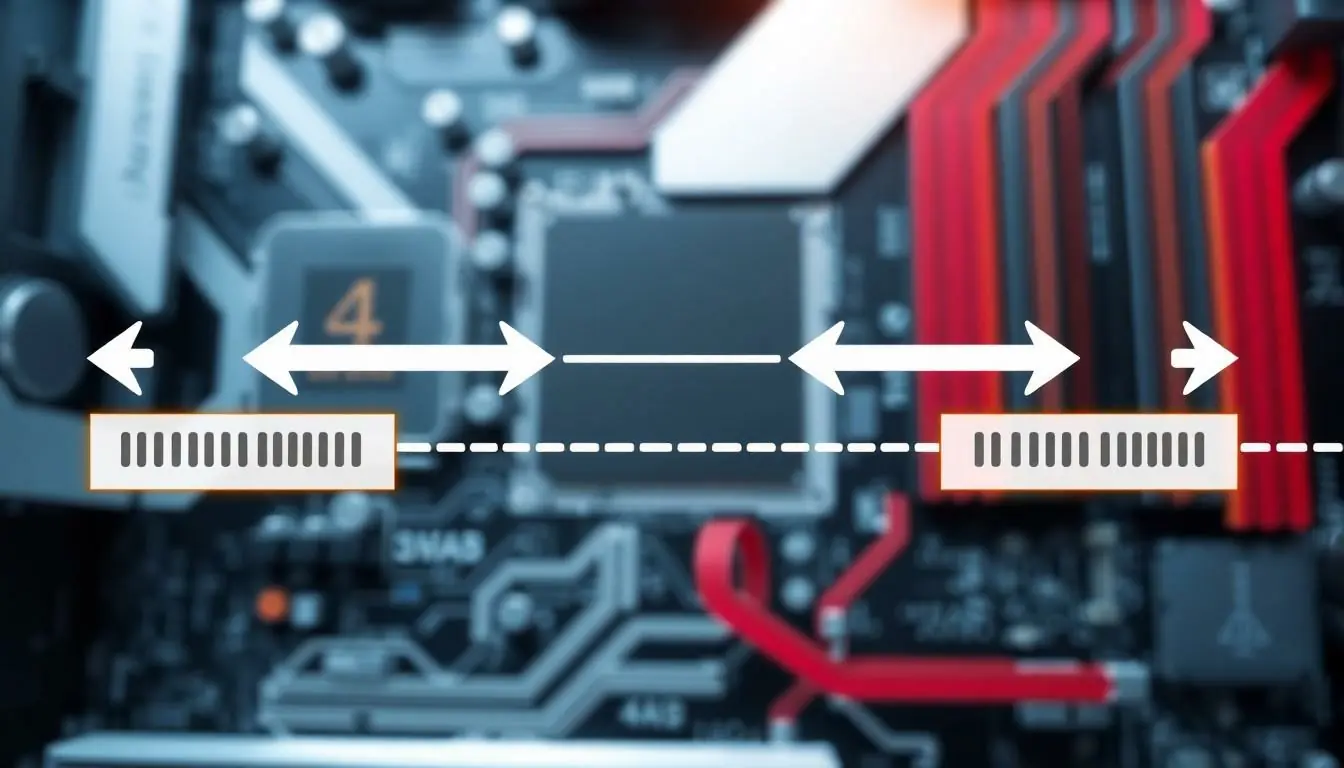

Parallel Data Transfer

Parallel data transfer sends multiple bits simultaneously, one on each of several data lines. Using many lines at once increases speed, which makes the method well suited to short distances. A common example is the memory bus on a computer’s motherboard, which links RAM to the CPU. Because a whole word arrives in a single transfer, parallel links offer low latency for performance-critical operations. Over longer distances, however, bits sent at the same moment on different lines begin to arrive at slightly different times (skew), and neighboring lines interfere with each other (crosstalk), which limits how fast and how far a parallel bus can run.
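
The speed advantage of parallel transfer comes down to how many bits move per clock cycle. This sketch assumes every data line runs at the same clock rate and carries one bit per cycle, and it ignores skew (bits on different lines arriving at slightly different times), the very effect that limits parallel buses over distance. `bus_cycles` is an illustrative name.

```python
def bus_cycles(num_bytes: int, data_lines: int) -> int:
    """Clock cycles needed to move num_bytes when each data line carries one bit per cycle."""
    total_bits = num_bytes * 8
    return (total_bits + data_lines - 1) // data_lines  # ceiling division


# Moving one 64-byte block at the same clock rate over buses of different widths.
for lines in (1, 8, 32, 64):
    print(f"{lines:>2} data lines: {bus_cycles(64, lines):>3} cycles")
```

At the same clock rate, the 64-line bus moves the block in 8 cycles, while a single line needs 512.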

Serial Data Transfer

Serial data transfer sends data one bit at a time over a single channel (or over each lane of a multi-lane link). Because there is no need to keep many lines in step with one another, serial links are far less affected by skew and interference, making them suitable for longer distances. Modern interfaces such as USB and SATA rely on serial transfer. Using fewer wires also lowers cost and simplifies physical designs. At the same clock rate, a single serial line moves fewer bits per cycle than a parallel bus; in practice, however, serial links can run at much higher clock rates, which is why serial SATA replaced parallel ATA and PCIe replaced the parallel PCI bus.
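
At its simplest, a serial link works like a shift register: the sender shifts a byte out one bit per clock tick and the receiver shifts the bits back into place. The sketch below assumes least-significant-bit-first order, as UART-style links use; the function names are hypothetical, and real interfaces such as SATA and USB also add line encoding, framing, and clock recovery that this omits.

```python
from typing import List


def serialize(data: bytes) -> List[int]:
    """Shift each byte out one bit per clock tick, least significant bit first."""
    bits: List[int] = []
    for byte in data:
        for i in range(8):
            bits.append((byte >> i) & 1)
    return bits


def deserialize(bits: List[int]) -> bytes:
    """Rebuild bytes from an LSB-first bit stream, 8 bits at a time."""
    if len(bits) % 8:
        raise ValueError("bit stream length must be a multiple of 8")
    out = bytearray()
    for start in range(0, len(bits), 8):
        byte = 0
        for i, bit in enumerate(bits[start:start + 8]):
            byte |= bit << i
        out.append(byte)
    return bytes(out)


stream = serialize(b"A")         # 'A' is 0x41 = 0b01000001
print(stream)                    # [1, 0, 0, 0, 0, 0, 1, 0]
print(deserialize(stream))       # b'A'
```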

Hardware Components Involved

Data transfer relies on key hardware components coordinating seamlessly. Each part plays a vital role in ensuring efficient communication and processing.

Central Processing Unit (CPU)

The Central Processing Unit acts as the brain of the computer system, performing calculations and executing instructions at very high speed. Signals travel between the CPU and other components over internal buses, enabling fast data exchange and complex tasks such as gaming or data analysis. CPUs use cache memory to keep frequently used data close at hand, which drastically reduces the latency of repeated accesses. For this reason, how efficiently data moves through a system depends heavily on the CPU’s speed and architecture, including its cache design.
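
The benefit of cache depends on whether the data a program reuses fits in it. This sketch models a tiny least-recently-used (LRU) cache that only counts hits and misses; the `LRUCache` class and `run` helper are hypothetical, and real CPU caches differ (they work on fixed-size lines, are set-associative, and often approximate LRU rather than implementing it exactly).

```python
from collections import OrderedDict


class LRUCache:
    """Tiny least-recently-used cache model that only counts hits and misses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: "OrderedDict[int, None]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> None:
        if address in self.entries:
            self.hits += 1
            self.entries.move_to_end(address)     # now the most recently used
        else:
            self.misses += 1                      # would have to go to main memory
            self.entries[address] = None
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used


def run(addresses, capacity=4):
    cache = LRUCache(capacity)
    for addr in addresses:
        cache.access(addr)
    return cache.hits, cache.misses


print(run([0, 1, 2, 3] * 10))     # (36, 4): small working set, mostly hits
print(run([0, 1, 2, 3, 4] * 10))  # (0, 50): working set one larger than the cache
```

When the working set fits, almost every access is a fast hit; when it is just one entry too large, this access pattern makes LRU evict each address right before it is needed again, so every access becomes a slow miss.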

Memory (RAM)

Random Access Memory is the temporary storage area the CPU uses while processing tasks. Its design allows fast read and write operations, making it vital for system performance. Data moves between RAM and the CPU over the memory bus, and because RAM sits much closer to the CPU in the memory hierarchy than storage does, it can be accessed far more quickly. Holding the working data of several applications in RAM at once supports multitasking and makes switching between them smooth. Both the size and the speed of RAM significantly influence data transfer efficiency: when RAM runs short, the operating system must move data out to much slower storage (paging or swapping), creating bottlenecks that hurt application performance and user experience.

Storage Devices

Storage devices keep data long-term, so information persists even when the computer powers down. Hard Disk Drives (HDDs) and Solid State Drives (SSDs) are the most common storage devices, each with distinct characteristics. Data moves from storage to RAM through interfaces such as SATA or NVMe, which govern speed and compatibility. SSDs support faster transfer rates and much quicker access because they have no moving parts, and NVMe SSDs, which connect over PCIe, are typically faster still than SATA models. The choice of storage therefore directly affects load times and data retrieval speeds.

Data Transfer Protocols

Data transfer protocols are sets of rules that govern how data moves between hardware components. They ensure that communication across a computer system is smooth, consistent, and efficient.

Common Protocols Explained

PCI Express (PCIe) is a high-speed interface used to connect graphics cards, SSDs, and other devices. It uses point-to-point connections, each made up of one or more lanes (such as x1, x4, or x16), and bandwidth scales with the number of lanes. Serial ATA (SATA) connects hard drives and SSDs, prioritizing reliable transfers at moderate speeds (up to 6 Gb/s for SATA III). Universal Serial Bus (USB) connects peripherals to computers and supports a wide range of devices, from keyboards to external drives. Ethernet handles networking, letting computers exchange data quickly over local area networks. Each protocol’s characteristics suit it to particular applications.

Importance of Protocols in Data Transfer

Protocols play a critical role in ensuring device compatibility. They specify data formatting, transmission speed, and how connections are established, allowing different hardware components to work together. Without these shared rules, devices could not reliably interpret each other’s data. A defined protocol also keeps sender and receiver synchronized, helping preserve data integrity as it moves between components. Many protocols include error detection, and some add error correction or automatic retransmission, making data exchanges more reliable. Because protocols are published standards, new devices can work with existing ones, and standards such as USB and PCIe maintain backward compatibility across generations. In short, protocols underpin the entire data exchange process, supporting the rapid communication that modern computing depends on.
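
Backward compatibility often comes down to both ends agreeing on a mode they share. The sketch below reduces that to picking the fastest signaling rate both sides advertise; the rate lists and the `negotiate_speed` name are illustrative assumptions. Real procedures, such as PCIe link training, are multi-step state machines defined in the specifications, not a simple set intersection.

```python
from typing import Iterable, Optional


def negotiate_speed(host_gbps: Iterable[float], device_gbps: Iterable[float]) -> Optional[float]:
    """Pick the fastest signaling rate both ends support; None means no common mode."""
    common = set(host_gbps) & set(device_gbps)
    return max(common) if common else None


# Illustrative rate lists: a newer host and an older device.
host = [0.48, 5.0, 10.0, 20.0]
device = [0.48, 5.0]
print(negotiate_speed(host, device))  # 5.0
```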

Factors Affecting Data Transfer

Several factors determine how efficiently and how quickly information travels between hardware components. Two of the most important are bandwidth and latency.

Bandwidth Considerations

Bandwidth is the maximum rate at which data can move across a communication channel. Higher bandwidth lets more data flow at once, improving overall performance. For example, a single PCIe 4.0 lane signals at 16 GT/s, or roughly 2 GB/s in each direction, so an x16 slot offers about 32 GB/s per direction (the often-quoted 64 GB/s counts both directions together). USB 3.2 Gen 2x2, by comparison, provides 20 Gb/s (gigabits, not gigabytes), or about 2.5 GB/s before encoding overhead. Adequate bandwidth is essential for applications that need high-speed data access, such as gaming or video editing. Insufficient bandwidth leads to congestion, slowing transmission and increasing load times, so interfaces should be chosen with enough bandwidth for the task at hand.
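
Interface bandwidth is usually quoted as a raw line rate in gigatransfers or gigabits per second, so converting it to usable gigabytes per second takes two steps: remove the line-encoding overhead, then divide by 8. The sketch below assumes one bit per transfer per lane and counts only one direction. The encoding ratios are the published ones (128b/130b for PCIe 3.0 through 5.0, 8b/10b for SATA, 128b/132b for USB 3.2 Gen 2), while packet and protocol overhead, which lower real throughput further, are ignored. The function name is illustrative.

```python
def usable_gb_per_s(line_rate_gt_s: float, payload_bits: int, coded_bits: int, lanes: int = 1) -> float:
    """Line rate minus line-encoding overhead, summed over lanes, in gigabytes per second (one direction)."""
    gigabits = line_rate_gt_s * payload_bits / coded_bits * lanes
    return gigabits / 8  # 8 bits per byte


links = {
    "PCIe 4.0 x1":     usable_gb_per_s(16, 128, 130),
    "PCIe 4.0 x16":    usable_gb_per_s(16, 128, 130, lanes=16),
    "SATA III":        usable_gb_per_s(6, 8, 10),
    "USB 3.2 Gen 2x2": usable_gb_per_s(10, 128, 132, lanes=2),
}
for name, rate in links.items():
    print(f"{name:<16} {rate:6.2f} GB/s")
```

This prints roughly 1.97 GB/s for one PCIe 4.0 lane, 31.51 GB/s for sixteen lanes, 0.60 GB/s for SATA III, and 2.42 GB/s for USB 3.2 Gen 2x2.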

Latency Impact

Latency is the delay between a request for data and the response. It includes the time a signal takes to travel between hardware components. For instance, RAM typically answers a request in well under a microsecond, whereas a hard disk drive may take several milliseconds because it must move its read head and wait for the platter to rotate. Contributors to latency include signal propagation time, processing delays, and protocol overhead. Minimizing latency is crucial for applications such as online gaming or real-time data processing, where responsiveness matters, which is why choosing hardware that balances bandwidth and latency is important for efficient data transfer.
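
A simple way to see how latency and bandwidth interact is to model a transfer as a fixed start-up delay plus the time to stream the data. The latency and bandwidth figures below are illustrative assumptions (roughly in the range of DRAM and a hard disk drive), not measurements, and the model ignores queuing and caching. `transfer_time_s` is an illustrative name.

```python
def transfer_time_s(size_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Simple model: a fixed start-up delay, then the data streams at full bandwidth."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


# Illustrative figures only (latency in seconds, bandwidth in bytes per second).
devices = {
    "RAM": (100e-9, 25e9),
    "HDD": (5e-3, 150e6),
}
for size in (4 * 1024, 1024 ** 3):  # a 4 KiB read and a 1 GiB read
    for name, (latency, bandwidth) in devices.items():
        total = transfer_time_s(size, latency, bandwidth)
        share = latency / total
        print(f"{name} {size:>10} bytes: {total:.6f} s, {share:.0%} of it waiting on latency")
```

For the 4 KiB read, the hard drive spends almost all of its time waiting on latency, while for the 1 GiB read, bandwidth dominates on both devices.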

Understanding data transfer in computers reveals the intricate dance between hardware components. Each element plays a vital role in ensuring that data moves swiftly and accurately. The combination of various transfer methods and protocols highlights the sophistication of modern computing systems.

As technology evolves, the need for efficient data transfer becomes even more critical. With the right hardware and protocols in place, users can experience seamless performance across applications. Ultimately, efficient data transfer not only enhances user experience but also drives innovation in computing technology.